If you were to believe the above tweet, you might think, “What’s the point? We’re doomed by this anti-forensic stuff.” No offence to Brent Muir, but I think differently.

A few weeks ago I attended ShmooCon and sat in on the presentation by Jake Williams and Alissa Torres on subverting memory forensics. As I sat through the talk I kept thinking that it would be impossible to completely hide every artifact related to your activity, a point Jake also made in his presentation. When I saw a tweet the other day with a link to download the memory image, I quickly grabbed it to see what I could find (if you are interested in having a look at the image, it can be found here). I should also state that I have not looked at anything else that was posted, as I didn’t want any hints on what to look for.

My first course of action once I had the memory dump was to figure out what OS I was dealing with. To find this, I used Volatility with the imageinfo plugin.

We can see from the above output that we have an image from a Windows 7 SP1 machine. My next step was to create a strings file of the memory image, grabbing both ASCII and Unicode strings.
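
The exact command used to build the strings file is not shown here. As a rough illustration of what grabbing both ASCII and Unicode strings means in practice, here is a minimal Python sketch. The function name `extract_strings` and the minimum length of 4 are assumptions made for the example, not part of any tool mentioned in this post. It keeps the byte offset of each hit, since the offset is what lets a string be mapped back to its owner later on.

```python
import re


def extract_strings(data: bytes, min_len: int = 4):
    """Yield (offset, encoding, text) for printable ASCII and UTF-16LE runs."""
    # Runs of printable ASCII bytes.
    ascii_re = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    # Runs of printable characters each followed by a NUL byte (UTF-16LE).
    wide_re = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % min_len)

    for match in ascii_re.finditer(data):
        yield match.start(), "ascii", match.group().decode("ascii")
    for match in wide_re.finditer(data):
        yield match.start(), "utf-16le", match.group().decode("utf-16-le")


# Tiny made-up buffer standing in for a memory image.
sample = (
    b"\x00\x01add.exe /proc\x00\xff"
    + "qggya123.exe".encode("utf-16-le")
    + b"\x00\x00\x01ab"
)

for offset, encoding, text in extract_strings(sample):
    print(offset, encoding, text)
```

For a multi-gigabyte image you would read the file in chunks or memory-map it rather than load it all at once.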

Now that I had all the data I needed I could begin my investigation.

Whenever you are given a piece of data to analyze, it is usually given to you for a reason. Whatever that reason is, our first course of action should be to find evidence that either confirms or refutes it. I knew from the presentation that the purpose of the tool was to hide artifacts from a forensic investigator, so I set out to find evidence that would validate that suspicion.

I began by looking at the loaded and unloaded drivers, since the tool would most likely need to interact with the kernel to modify these various memory locations. I wanted to see if there were any odd filenames, memory locations or file paths, and used the Volatility plugins modscan and unloadedmodules for this. Unfortunately, this led me to a dead end.

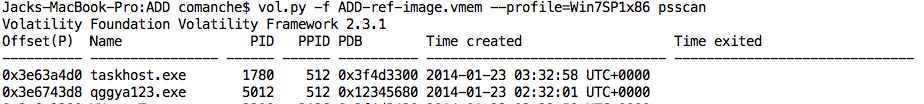

My next course of action was to take a look at the processes that were running on the machine. I compared the output from pslist and psscan, which enumerate processes in different ways, and noted any differences. The pslist plugin walks the doubly-linked list of EPROCESS structures, whereas the psscan plugin scans memory for EPROCESS structures. My thought was that if a process had been hidden by unlinking it from the list, I would be able to identify it by comparing the two.

By diffing the two files I was able to see something odd in the psscan output.
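
The comparison itself is simple enough to sketch in a few lines of Python. This is only an illustration: it assumes each plugin's text output has been saved with rows that start with a hex offset followed by name, PID and PPID. The function names and the sample rows (including the offsets) are invented for the example rather than copied from the actual image.

```python
def parse_processes(text: str) -> dict:
    """Map PID -> (name, PPID) from rows shaped like '0x... name pid ppid ...'."""
    procs = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip headers, separators and anything that is not an offset row.
        if len(fields) < 4 or not fields[0].startswith("0x"):
            continue
        try:
            pid, ppid = int(fields[2]), int(fields[3])
        except ValueError:
            continue  # e.g. a process name that contains a space
        procs[pid] = (fields[1], ppid)
    return procs


def only_in_scan(pslist_text: str, psscan_text: str) -> dict:
    """Return processes that the scan found but the linked-list walk did not."""
    listed = parse_processes(pslist_text)
    scanned = parse_processes(psscan_text)
    return {pid: info for pid, info in scanned.items() if pid not in listed}


# Made-up sample output; only the qggya123.exe name, PID and PPID come from the post.
PSLIST = """\
Offset     Name          PID  PPID
0x00000001 System          4     0
"""
PSSCAN = """\
Offset     Name          PID  PPID
0x00000001 System          4     0
0x00000002 qggya123.exe 5012   512
"""

print(only_in_scan(PSLIST, PSSCAN))
```

Keying on the PID keeps the sketch short. A more careful version would also compare names and creation times, since PIDs can be reused.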

We can see that qggya123.exe definitely looks odd. If we look for our odd process name using this plugin, it appears that the process may no longer be running, although there is no end time listed in the output; this may also simply be related to Jake’s tool.

The psxview plugin attempts to enumerate processes using several different techniques, the thought being that to hide a process you have to evade detection in a number of different ways. Looking at the output below, the only way we are able to see that this suspicious-looking process was running (at least at one point in time) is by scanning for EPROCESS structures.

Now that we have something to go off of, my next step was to see if I could gain a little more context around the process name. The PID of our suspicious process is 5012 with a PPID of 512. If we look to see what PID 512 is, we can see that it was started under services.exe.

Naturally, I wanted to see what services were running on the machine. For this I used the svcscan plugin, but was unable to locate our suspicious filename.

The next step I took was to search for the filename in the memory strings. The output below shows the file being executed from the command line by a file named add.exe. We also see that our PID and PPID are included as parameters. Now that we know how it was executed, we have an additional indicator to search for.

Searching for add.exe I was able to see several different items of interest.

1. add.exe with the /proc parameter was used to execute three different files: qggya123.exe, cmd.exe and rundll32.exe

2. qggya123.exe is the parent of cmd.exe

3. cmd.exe is the parent of rundll32.exe

4. The /file parameter is, I assume, used to hide the presence of various files in the /private directory

5. The /tcpCon command was used to specify TCP connections, given as source IP (decimal), source port, destination IP (decimal) and destination port; I’m guessing the final 4 indicates IPv4

1885683891 = 112.101.64.179

1885683931 = 112.101.64.219

1885683921 = 112.101.64.209

1885683931 = 112.101.64.219

1885683921 = 112.101.64.209
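
The decimal values are just the four octets of the address packed into one 32-bit integer, most significant octet first. Here is a quick way to check the conversions above, sketched in Python (the helper name `decimal_to_ipv4` is my own):

```python
import ipaddress


def decimal_to_ipv4(value: int) -> str:
    """Convert a 32-bit integer (most significant octet first) to dotted-quad."""
    octets = [(value >> shift) & 0xFF for shift in (24, 16, 8, 0)]
    return ".".join(str(octet) for octet in octets)


for value in (1885683891, 1885683931, 1885683921):
    # The standard library agrees with the manual shift-and-mask version.
    assert decimal_to_ipv4(value) == str(ipaddress.IPv4Address(value))
    print(value, "=", decimal_to_ipv4(value))
```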

If we look around those strings we are able to see even more of the malicious activity.

I did try to dump these files from memory, but was unable to. I also tried to create a timeline to identify the sequence of activities, but was unable to locate the activity I was looking for.

One item that would be a top priority at this point would be to identify all the port 443 connections generated from this host. Based on the output from netscan we can see that the known malicious connections were established, but we still need to verify that these are the only ones. We would also want to look at any pcap associated with these connections to identify what data was transferred, and possibly to create some network detections.

Knowing that there is some type of process, file and network connection hiding going on, and given that I’m not able to analyze the memory image as I would typically expect to, I would most likely request a disk image at this point. Even with the anti-forensics tactics that were employed, I was able to determine enough to know that this machine is definitely compromised, as well as some of the methods being used by the attacker. I was also able to generate a list of good indicators that could be used to scan additional hosts for the same type of activity.

In case anyone is interested, I created a YARA rule for the activity identified.

    rule add
    {
        strings:
            $a = "p_remoteIP = 0x"
            $b = "p_localIP = 0x"
            $c = "p_addrInfo = 0x"
            $d = "InetAddr = 0x"
            $e = "size of endpoint = 0x"
            $f = "FILE pointer = 0x"
            $g = " /tcpCon "
            $h = "Bytes allocated for fake Proc = "
            $i = "EPROC pool pointer = 0x"
            $j = "qggya123.exe"
            $k = "add.exe" wide
            $l = "c:\\add\\add\\sys\\objchk_win7_x86\\i386\\sioctl.pdb"
            $m = "sioctl.sys"
            $n = "\\private"

        condition:
            any of them
    }
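
If YARA is not to hand, the “any of them” condition can be roughly approximated with a plain byte search. The sketch below is only that, an approximation in Python and not a replacement for the rule: the names `INDICATORS` and `matching_indicators` are invented for the example, and the only subtlety it models is that `$k` is declared `wide`, so it is matched in its UTF-16LE form only.

```python
# Indicator strings copied from the rule; $k is encoded as UTF-16LE
# to mimic the "wide" modifier, the rest are plain ASCII.
INDICATORS = {
    "$a": b"p_remoteIP = 0x",
    "$b": b"p_localIP = 0x",
    "$c": b"p_addrInfo = 0x",
    "$d": b"InetAddr = 0x",
    "$e": b"size of endpoint = 0x",
    "$f": b"FILE pointer = 0x",
    "$g": b" /tcpCon ",
    "$h": b"Bytes allocated for fake Proc = ",
    "$i": b"EPROC pool pointer = 0x",
    "$j": b"qggya123.exe",
    "$k": "add.exe".encode("utf-16-le"),
    "$l": b"c:\\add\\add\\sys\\objchk_win7_x86\\i386\\sioctl.pdb",
    "$m": b"sioctl.sys",
    "$n": b"\\private",
}


def matching_indicators(data: bytes) -> list:
    """Return the identifiers of every indicator present in the buffer."""
    return [name for name, pattern in INDICATORS.items() if pattern in data]


def rule_matches(data: bytes) -> bool:
    """Equivalent of the rule's condition: any single indicator is enough."""
    return bool(matching_indicators(data))


# Made-up buffer for demonstration.
sample = b"junk" + "add.exe".encode("utf-16-le") + b" /tcpCon 1"
print(matching_indicators(sample))
```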

This was a quick analysis of the memory image and I am still poking around in it. As I find any additional items I’ll update this post.

Update 1:

Running the driverscan plugin, which scans for DRIVER_OBJECT structures, we can see something that looks a little suspicious.

After seeing this I ran the devicetree plugin to see if I was able to get an associated driver name. Based on the output below, it looks like our malicious driver is named sioctl.sys.

So now that we have a driver name, let’s see if we can dump the driver.

Looking at the .pdb path in the strings output we can see that we have our malicious driver.

Update 2:

An additional piece of information I found while running filescan, looking for the files called by the execution of add.exe, was the file path returned by Volatility. All of the paths appear to have \private as the root directory. I suspect this could be a great indicator for identifying compromised machines.

I would normally expect to see file paths rooted at \Device\HarddiskVolume1\

I verified this by locating every file that has \private in the file path; every file returned was one of those that appear to be faked.
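
That check is easy to automate once the file paths have been pulled out of the filescan output. Here is a minimal Python sketch, assuming the paths are already available as a list of strings. The helper name `rooted_at_private` and the two sample paths are made up for illustration.

```python
def rooted_at_private(path: str) -> bool:
    r"""True when the first path component is \private (case-insensitive)."""
    parts = [part for part in path.split("\\") if part]
    return bool(parts) and parts[0].lower() == "private"


# Hypothetical paths: one with the usual device root, one with the odd root.
paths = [
    r"\Device\HarddiskVolume1\Windows\System32\ntdll.dll",
    r"\private\example.bin",
]

for path in paths:
    if rooted_at_private(path):
        print("suspicious root:", path)
```

Checking only the first component avoids flagging a legitimate directory that merely happens to be called private somewhere deeper in a normal path.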

Update 3:

One of the cool things we can do with Volatility is run our YARA rules against the memory image. When a hit is found, it will display the process in which the string was found. The syntax we would use to scan the image with our YARA rule is:

We can see that all of the command line input and output is located in the vmtoolsd process. I limited the output for brevity.

We also see two different processes where add.exe was found.

If we compare what we found with the strings plugin we see one difference that we were unable to find with the yara plugin. By mapping the string of our original suspicious file back to a process we see that it’s located in kernel space.

We can also take the strings from the binary and see where those map back to as well.

Had we been unable to locate the driver with driverscan and devicetree, we still may have been able to identify the file based on the string found.

Update 4:

The awesome people over at Volatility were kind enough to send me an unreleased plugin to demonstrate the faked connections that add.exe created. I’m told the plugin will be coming out with their new book. The netscan plugin uses pool scanning, so it may find objects that were faked, like the ones we have already seen. The new plugin works against the partition tables and hash buckets that are found in the kernel. I’m sure MHL can explain it much better than I just did.

If we look at the normal netscan output we are able to see the faked established connections.

Now if we use the tcpscan plugin, we can see the difference (or not see the difference).

Valid established connections would look like this with the tcpscan output.

As you can see, we were able to find additional artifacts related to the add.exe tool by comparing the output of the two plugins.

Again, thanks to the Volatility team for floating me the plugin to use for this blog post, as well as for all of their research and the work going into the tool and moving the field of memory forensics forward!