I've seen people on Twitter refer to hunting as more of a pre-detection action than a completely separate process. Granted, hunting will often lead to new detections, but I don't think that should always be the goal or even the focus. I believe hunting should produce insights into your data, and that the hunting process should be defined with the goal of surfacing unknown, malicious activity. I also don't believe that the results of a hunt should always resolve to a true positive or a false positive (this is where the pre-detection thought comes in). Instead, they should provide enough information to act, watch or discard.

Over the past few years I've spent a lot of time creating capabilities around categories or stages of threats and have found great success in this. Building Jupyter notebooks that focus on these categories or stages gives you the ability to consume, enrich and output data in ways that surface insights you may not otherwise be able to see. Something I learned along the way was not to let my knowledge or my tools and data set at that point in time be the limiting factor. My ability to hunt often hinged on my willingness to add additional methods, sources of data or even people with a particular expertise.

These past few months I've been working toward a methodology for hunting social media targeting. I initially wanted to be able to distinguish normal interactions from anomalous ones. As I worked with this more and more, though, I found several key questions that needed to be answered over and over once suspicious profiles had been identified. Things like: Does the profile send connection requests to multiple employees with diverse job roles, or to a few people who are similar? Does the profile follow up connection accepts with messages? Are there multiple messages between a fake profile and an employee? What is the job title of the fake profile? Would the job title seem to entice a user into further interactions?
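As a rough sketch of how some of those recurring questions could be answered from data, the snippet below summarizes the events tied to a single suspicious profile. The event tuples, the event type names and the `triage_summary` helper are all hypothetical and only stand in for whatever your own collection produces.

```python
from collections import Counter

# Hypothetical events for one suspicious profile:
# (employee, employee job title, event type).
events = [
    ("user1", "Software Engineer", "connection_request"),
    ("user1", "Software Engineer", "connection_accept"),
    ("user1", "Software Engineer", "message"),
    ("user1", "Software Engineer", "message"),
    ("user2", "Site Reliability Engineer", "connection_request"),
    ("user3", "Recruiter", "connection_request"),
    ("user3", "Recruiter", "connection_accept"),
]


def triage_summary(profile_events):
    """Summarize who a profile contacted and how far each interaction went."""
    titles = {}
    accepted = set()
    messages = Counter()
    for employee, title, kind in profile_events:
        titles[employee] = title
        if kind == "connection_accept":
            accepted.add(employee)
        elif kind == "message":
            messages[employee] += 1
    return {
        # How many employees were contacted, and how diverse are their roles?
        "employees_contacted": len(titles),
        "job_titles": Counter(titles.values()),
        # Employees who accepted and also have at least one message
        # (event ordering is not checked in this sketch).
        "accepted_and_messaged": sorted(e for e in accepted if messages[e] > 0),
        # Employees with more than one message exchanged with the profile.
        "employees_with_multiple_messages": sorted(
            e for e, n in messages.items() if n > 1
        ),
    }


summary = triage_summary(events)
```

Questions about the fake profile's own job title, and whether it would entice a user, still need a human look. The summary only narrows down where to look first.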

The biggest hurdle, though, is being able to identify fake profiles. While I'm not going to go into the types of data we're collecting, I will say that the more visibility you have into web-based traffic, the better your collection will be. You can also do a lot with simple email parsing. An email notification for a connection request or a message will include a link with the profile name as well as a link to the profile image. You may also be surprised at the percentage of people who have their work email linked to their account.
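To illustrate the email parsing idea, here is a minimal sketch that pulls the recipient, profile link and profile image link out of a notification email using only the Python standard library. The sample message, the example.com URLs and the `/in/` and `/images/` path conventions are invented for illustration. Real notifications differ by platform, so the patterns would need adjusting.

```python
import re
from email import message_from_string
from email.utils import parseaddr

# Hypothetical notification email; real messages vary by platform.
SAMPLE_EMAIL = """\
From: notifications@social.example.com
To: Jane Doe <jane.doe@corp.example.com>
Subject: Alex Smith wants to connect
MIME-Version: 1.0
Content-Type: text/html; charset="utf-8"

<html><body>
<a href="https://social.example.com/in/alex-smith-123456">Alex Smith</a>
<img src="https://cdn.social.example.com/images/alex-smith-123456.jpg">
</body></html>
"""

# Assumed URL shapes: profile pages under /in/<slug>, images under /images/.
PROFILE_RE = re.compile(r'href="(https://[^"]+/in/([^"/?]+))')
IMAGE_RE = re.compile(r'src="(https://[^"]+/images/[^"]+)"')


def parse_notification(raw_email):
    """Return the recipient, profile slug/URL and image URL from one email."""
    msg = message_from_string(raw_email)
    # Walk the MIME parts and keep the first HTML body found.
    html = ""
    for part in msg.walk():
        if part.get_content_type() == "text/html":
            payload = part.get_payload(decode=True)
            charset = part.get_content_charset() or "utf-8"
            html = payload.decode(charset, "replace")
            break
    profile = PROFILE_RE.search(html)
    image = IMAGE_RE.search(html)
    return {
        "recipient": parseaddr(msg.get("To", ""))[1],
        "subject": msg.get("Subject", ""),
        "profile_url": profile.group(1) if profile else None,
        "profile_slug": profile.group(2) if profile else None,
        "image_url": image.group(1) if image else None,
    }


record = parse_notification(SAMPLE_EMAIL)
```

Each parsed record ties one employee mailbox to one profile, which is enough to start counting interactions per profile.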

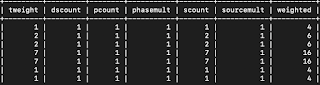

The first stage of identification is looking for anomalous interactions. I calculate statistics around how many users the profile interacted with, how many interactions there were between a user and a profile, how many distinct interactions there were, and the span of time over which the user and profile interacted. I then run these numbers through an isolation forest to highlight outliers. The results are basically eyeballed to see if anything stands out.
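The statistics described above could be assembled along these lines. This is a sketch under assumptions, not the notebook's actual code: the record layout, the feature names and the `profile_features` helper are hypothetical.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical interaction records:
# (profile, user, interaction type, ISO timestamp).
interactions = [
    ("profile-a", "user1", "connection_request", "2021-03-01T09:00:00"),
    ("profile-a", "user1", "message", "2021-03-02T10:30:00"),
    ("profile-a", "user2", "connection_request", "2021-03-01T09:05:00"),
    ("profile-a", "user3", "connection_request", "2021-03-04T09:00:00"),
    ("profile-b", "user4", "connection_request", "2021-03-03T14:00:00"),
]


def profile_features(records):
    """Aggregate one row of numeric features per profile."""
    users = defaultdict(set)
    kinds = defaultdict(set)
    counts = defaultdict(int)
    times = defaultdict(list)
    for profile, user, kind, ts in records:
        users[profile].add(user)
        kinds[profile].add(kind)
        counts[profile] += 1
        times[profile].append(datetime.fromisoformat(ts))
    rows = {}
    for profile in counts:
        # Time between the first and last interaction seen for this profile.
        span = max(times[profile]) - min(times[profile])
        rows[profile] = {
            "distinct_users": len(users[profile]),
            "total_interactions": counts[profile],
            "interactions_per_user": counts[profile] / len(users[profile]),
            "distinct_interaction_types": len(kinds[profile]),
            "span_hours": span.total_seconds() / 3600,
        }
    return rows


features = profile_features(interactions)
```

From here the rows can be turned into a numeric matrix and handed to any isolation forest implementation. One option is scikit-learn's `IsolationForest`, whose `fit_predict` marks outliers with `-1`.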

By labeling nodes we can start grouping by labels. You may have noticed in the screenshot for the initial insert that a label "jobtitle" was added. Visually, you can see whether similar job roles are being targeted, or look at, say, all engineers that had interactions with fake accounts. Below is a graph of interactions with fake accounts within a 7-day period.

Being able to also pull data back into the notebook based on labels and relationships brings additional opportunities for further enrichment and analysis. The following query would retrieve interactions for the stated profile.

    data_list = tx.run("""match (r)-[:KNOWS]-(p) where (r)-[:KNOWS]-(p {name: 'fake-profile-123456'}) return r,p""").data()

One of the enrichments I've done is retrieving users based on their interactions with watched accounts and bringing all of the detections each identified user has had in the past 'x' days into the notebook.
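A minimal sketch of that enrichment, assuming the watched-account users and a detection log are already available in the notebook. The rule names, the record layout and the `recent_detections` helper are hypothetical placeholders.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical inputs: users tied to watched accounts, and a detection log.
watched_users = {"user1", "user3"}
detections = [
    {"user": "user1", "rule": "suspicious_macro", "time": "2021-03-05T08:00:00"},
    {"user": "user1", "rule": "rare_domain", "time": "2021-01-02T08:00:00"},
    {"user": "user2", "rule": "rare_domain", "time": "2021-03-06T08:00:00"},
    {"user": "user3", "rule": "new_oauth_app", "time": "2021-03-07T08:00:00"},
]


def recent_detections(users, detection_log, days, now):
    """Group detections from the last `days` days by user, for `users` only."""
    cutoff = now - timedelta(days=days)
    by_user = defaultdict(list)
    for det in detection_log:
        when = datetime.fromisoformat(det["time"])
        # Keep only watched users and detections inside the time window.
        if det["user"] in users and cutoff <= when <= now:
            by_user[det["user"]].append(det["rule"])
    return dict(by_user)


enriched = recent_detections(
    watched_users, detections, days=30, now=datetime(2021, 3, 10)
)
```

Passing `now` explicitly keeps the window reproducible when a hunt is rerun later against the same data.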

The notebook does much more. The capability here is obviously not something you can write a detection for; it is an environment in which to hunt for a specific type of threat. This allows us to hunt at scale without needing several people spending hours a day. It also allows us to pivot in ways that you simply couldn't otherwise.

This is the result of shared work and I would personally like to thank the people that know way more than I do about social media targeting as well as those that helped with the GAN detection model. You know who you are.