If you subscribe to the notion that a user who is intent on stealing data from your org will require a change in their behavior, then identifying that change is critically important. As this change happens, they will take actions that they have not previously taken. These actions can be seen as anomalies, and this is where we want to identify and analyze their behavior.

I've been studying insider IP theft, particularly cases with a connection to China, for a number of years now. I feel that, in a way, this problem mimics the APT problem of 10 years ago: nobody with exposure to it is willing to talk about what works or what doesn't. This leaves open the opportunity for this behavior to continue successfully. While I'm not going to share specific signatures, I would like to talk about the logic I use for hunting. This is my attempt to generate conversation around an area that, in my opinion, can't be ignored.

Just as in network intrusions, there are phases that an insider will likely go through before data exfil occurs. But unlike network intrusions, not all of these phases are required, as the insider probably already has all the access they need. They may perform additional actions to hide what they are doing. They may collect data from different areas of the network. They may even simply exfil data with no other steps.

There are no set TTPs for classes of insiders, but below are some phases that you could see:

- Data Discovery
- Data Collection
- Data Packaging
- Data Obfuscation
- Data Exfil

I've also added a few additional points that may be of interest. These aren't necessarily phases, but they may add to the story behind a user's behavior. I'm including them in the phase category for scoring purposes, though; the scoring is explained below. The additional points of interest are:

- Motive - Is there a reason behind their actions?
- Job Position - Does their position give them access to sensitive data?
- Red Flags - e.g., the employee has submitted a two-week notice.

By assigning these tags, behaviors that span multiple phases suddenly become more interesting. In many cases, activity across multiple phases, such as data packaging -> data exfil, should rank above a single phase such as data collection. This is because a rule is only designed to accurately identify an action, regardless of intent, but by looking at the sum of these actions we can begin to surface behaviors. This is not to say that a high total count for a single rule, or a single instance of a highly critical rule, will not draw the same attention. It should, and that's where rule weighting comes in.

Weighting is simply assigning a numeric score to the criticality of an action. If a user performs an action that is being watched, that rule's score is added to their total weight (weighted) for the day. Depending on a user's behavior, their weighted score may rise over the course of that day. If a user begins exhibiting anomalous behavior and a threshold is met, based on certain conditions, an alert may fire.
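A minimal sketch of that daily accumulation, assuming each watched action arrives as a (user, day, rule name) tuple. The rule names and weights in `RULE_WEIGHTS` are hypothetical placeholders; the actual rules and their weights are not shared here.

```python
from collections import defaultdict

# Hypothetical rule weights for illustration only; real values are not published.
RULE_WEIGHTS = {
    "archive_created": 50,   # e.g. a Data Packaging rule
    "usb_write": 150,        # e.g. a Data Exfil rule
    "cloud_upload": 200,     # e.g. another Data Exfil rule
}


def daily_weights(events):
    """Sum rule weights per (user, day).

    `events` is an iterable of (user, day, rule_name) tuples. Rules that are
    not listed in RULE_WEIGHTS contribute 0.
    """
    totals = defaultdict(int)
    for user, day, rule in events:
        totals[(user, day)] += RULE_WEIGHTS.get(rule, 0)
    return dict(totals)


events = [
    ("alice", "2020-06-01", "archive_created"),
    ("alice", "2020-06-01", "usb_write"),
    ("bob", "2020-06-01", "archive_created"),
    ("alice", "2020-06-02", "cloud_upload"),
]
weights = daily_weights(events)
```

Keying on (user, day) means the weight resets each day, so a user's score only climbs with actions taken within that day.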

An explanation of alert generation: my first attempt at this was simply to correlate multiple events per user. As I developed new queries, the number of alerts I received grew drastically. There was really no logic other than looking for multiple events, which simply led to noise. I then sat down, thought about the reasons why I would want to be notified, and came up with:

- User has been identified in multiple rules + single phase + weight threshold met (500)
- User has been identified in multiple phases + weight threshold met (300)
- User exceeds weight threshold (1000)

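To describe this logic in numbers, it would look like the following, where scount is the number of rules (sources) and pcount is the number of phases a user has been seen in:

```
| where (scount > 1 AND TotalWeight > 500) OR (pcount > 1 AND TotalWeight > 300) OR (TotalWeight > 1000)
```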
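The same three conditions can be mirrored in Python, which can be handy for checking threshold changes offline before touching the production query. This is a sketch that assumes the rule count, phase count and total weight have already been computed per user; the strict `>` comparisons match the query.

```python
def should_alert(scount, pcount, total_weight):
    """Return True if any of the three alert conditions is met.

    scount: number of rules the user was seen in
    pcount: number of phases the user was seen in
    total_weight: the user's summed weight for the day
    """
    multi_rule = scount > 1 and total_weight > 500
    multi_phase = pcount > 1 and total_weight > 300
    heavy = total_weight > 1000
    return multi_rule or multi_phase or heavy


checks = {
    "two rules, one phase, 600": should_alert(2, 1, 600),
    "one rule, two phases, 350": should_alert(1, 2, 350),
    "one rule, one phase, 900": should_alert(1, 1, 900),
    "one rule, one phase, 1001": should_alert(1, 1, 1001),
}
```

Because the multi-phase threshold (300) is lower than the multi-rule one (500), a user spread across phases alerts sooner than one who repeats actions within a single phase.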

By implementing those three requirements, I was able to eliminate the vast majority of noise and began surfacing interesting behavior. I did wonder what I might be missing, though. Were there users exhibiting behavior that I would want to investigate? Obviously, my alert logic wouldn't identify everything of interest.

I needed a good way to hunt for threads to pull, so I set about describing behaviors in numbers. I wrote about this a little in a previous post, http://findingbad.blogspot.com/2020/05/its-all-in-numbers.html, but I'll go through it again.

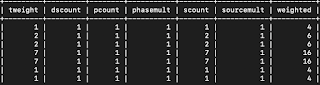

Using the existing detection rules, I used the rule name, weight, and phase fields to create metadata that describes a user's behavior. Here are the fields I created and use:

- Total Weight - The summed weight of a user's behavior.
- Distinct rule count - The number of unique rule names a user has been seen in.
- Total rule count - The total number of rule hits for a user, including repeats of the same rule.
- Phase count - The number of phases a user has been seen in.
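A sketch of how these four fields could be derived from one user's rule hits for a day. The `RuleHit` structure and the sample rule names and weights are hypothetical; only the field definitions come from the list above. Red Flags is treated as a phase here, matching how the extra points are scored.

```python
from typing import NamedTuple


class RuleHit(NamedTuple):
    """One rule firing for a user on a given day (hypothetical structure)."""
    rule: str
    weight: int
    phase: str


def describe(hits):
    """Build the per-user metadata fields from a day's rule hits."""
    return {
        "total_weight": sum(h.weight for h in hits),
        "distinct_rule_count": len({h.rule for h in hits}),
        "total_rule_count": len(hits),  # repeats of the same rule count again
        "phase_count": len({h.phase for h in hits}),
    }


hits = [
    RuleHit("archive_created", 50, "Data Packaging"),
    RuleHit("archive_created", 50, "Data Packaging"),
    RuleHit("usb_write", 150, "Data Exfil"),
    RuleHit("two_week_notice", 100, "Red Flags"),
]
meta = describe(hits)
```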

Knowing that riskier behavior often involves multiple actions taken by a user, I created the following fields to convey this:

- Phase multiplier - Additional value given to a user who is seen in multiple phases. It increases for every phase above 1.
- Source multiplier - Additional value given to a user who is seen in multiple rules. It increases for every rule above 1.

We then add Total Weight + Total rule count + Phase multiplier + Source multiplier to get the user's weighted score.
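A sketch of the weighted score. The sum itself follows the formula above, but the step sizes `PHASE_STEP` and `SOURCE_STEP` are hypothetical, as are two further assumptions: that each multiplier grows linearly, and that the source multiplier counts distinct rules rather than total hits.

```python
# Hypothetical increments; how large each step is has not been specified.
PHASE_STEP = 25
SOURCE_STEP = 10


def weighted_score(total_weight, total_rule_count, phase_count, distinct_rule_count):
    """Total Weight + Total rule count + Phase multiplier + Source multiplier."""
    # Grows by PHASE_STEP for every phase above 1 (linear growth is an assumption).
    phase_multiplier = PHASE_STEP * max(0, phase_count - 1)
    # Grows by SOURCE_STEP for every rule above 1; using distinct rules is an assumption.
    source_multiplier = SOURCE_STEP * max(0, distinct_rule_count - 1)
    return total_weight + total_rule_count + phase_multiplier + source_multiplier


single = weighted_score(total_weight=50, total_rule_count=1, phase_count=1, distinct_rule_count=1)
spread = weighted_score(total_weight=350, total_rule_count=4, phase_count=3, distinct_rule_count=3)
```

With these placeholder steps, a lone rule hit scores 51, while the three-phase, three-rule day scores 350 + 4 + 50 + 20 = 424. Both multipliers are zero for a user seen in only one phase and one rule.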

By generating these numbers, we can not only observe how a user acted over the course of that day, but also surface anomalous behavior by comparing it to how other users acted. For this I'm using an isolation forest and feeding it the total weight, phase count, total rule count, and weighted numbers. I feel these values best describe how a user acted and are therefore best suited for identifying anomalous activity.
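To show what the isolation forest is doing with those four numbers, here is a minimal, dependency-free sketch of the technique. In practice a library implementation such as scikit-learn's `IsolationForest` would more likely be used; this version only illustrates the idea. Each tree splits a random sample on a random feature at a random threshold, and users that are isolated in fewer splits get scores closer to 1. The sample users and their values are made up.

```python
import math
import random


def avg_path_length(n):
    """c(n): expected path length of an unsuccessful BST search over n points,
    used to normalize isolation depths."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + 0.5772156649  # approximates H(n - 1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def build_tree(rows, depth, max_depth, rng):
    """Recursively split rows on a random feature at a random threshold."""
    if depth >= max_depth or len(rows) <= 1:
        return {"size": len(rows)}
    # Only features that still vary within this node can be split.
    dims = [d for d in range(len(rows[0]))
            if min(r[d] for r in rows) < max(r[d] for r in rows)]
    if not dims:
        return {"size": len(rows)}
    dim = rng.choice(dims)
    lo = min(r[dim] for r in rows)
    hi = max(r[dim] for r in rows)
    split = rng.uniform(lo, hi)
    return {
        "dim": dim,
        "split": split,
        "left": build_tree([r for r in rows if r[dim] < split], depth + 1, max_depth, rng),
        "right": build_tree([r for r in rows if r[dim] >= split], depth + 1, max_depth, rng),
    }


def path_length(x, node, depth=0):
    """Depth at which x reaches a leaf, plus c(size) for leaves holding several rows."""
    if "split" not in node:
        return depth + avg_path_length(node["size"])
    child = node["left"] if x[node["dim"]] < node["split"] else node["right"]
    return path_length(x, child, depth + 1)


def isolation_scores(rows, n_trees=100, sample_size=256, seed=0):
    """Anomaly score per row in (0, 1); values near 1 are the easiest to isolate."""
    rng = random.Random(seed)
    psi = min(sample_size, len(rows))
    max_depth = math.ceil(math.log2(psi)) if psi > 1 else 1
    trees = [build_tree(rng.sample(rows, psi), 0, max_depth, rng) for _ in range(n_trees)]
    norm = avg_path_length(psi)
    scores = []
    for x in rows:
        mean_depth = sum(path_length(x, t) for t in trees) / n_trees
        scores.append(2 ** (-mean_depth / norm) if norm > 0 else 0.5)
    return scores


# Hypothetical daily metadata: (total weight, phase count, total rule count, weighted)
daily = {f"user{i:02d}": (50 + i, 1, 1 + i % 3, 51 + i + i % 3) for i in range(30)}
daily["user_x"] = (1200, 4, 15, 1240)
names = list(daily)
scores = isolation_scores([daily[n] for n in names])
ranked = sorted(zip(names, scores), key=lambda pair: pair[1], reverse=True)
```

Because `user_x` sits far from everyone else on all four features, almost any random split separates it early, so it ends up at the top of `ranked`.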

I'm also storing this metadata so that I can:

- Look at a user's behavior patterns over time. This particular user was identified on 4 different days:
- Compare their sum of activity to that of other anomalous users. This helps me gauge the scale of their behavior. This user's actions are leaning outside of normal patterns:

I can also look at the daily activity and compare it against the top anomalous users, or see where the user ranks as a percentage. You can see on the plot below that the user's actions were anomalous on a couple of different days.
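A sketch of the day-over-day ranking, assuming the stored metadata is keyed by day and holds each user's weighted score. The history below is made-up sample data. "Percentage" here means the share of other users that day with a strictly lower score, which is only one of several reasonable percentile definitions.

```python
from bisect import bisect_left

# Hypothetical stored metadata: day -> {user: weighted score}
history = {
    "2020-06-01": {"alice": 40, "bob": 55, "carol": 610, "dave": 30},
    "2020-06-02": {"alice": 45, "bob": 60, "carol": 50, "dave": 35},
    "2020-06-03": {"alice": 38, "bob": 52, "carol": 900, "dave": 33},
}


def rank_by_day(history, user):
    """For each day the user appears, return the percentage of other users
    on that day whose score is strictly lower than the user's."""
    ranks = {}
    for day, scores in sorted(history.items()):
        if user not in scores:
            continue
        others = sorted(v for u, v in scores.items() if u != user)
        if not others:
            continue
        below = bisect_left(others, scores[user])  # count of strictly lower scores
        ranks[day] = 100.0 * below / len(others)
    return ranks


carol = rank_by_day(history, "carol")
```

For this sample, carol outranks every other user on the first and third days and about two thirds of them on the second, and `len(carol)` gives the number of days she appears in the stored data.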

There are also a number of other use cases for retaining this metadata.

This has taken a lot of time and effort to get to this point, and it is still a work in progress. I can say, though, that I have found this to be a great way to quickly identify the threads that need to be pulled.

Again, I'm sharing this in the hope that a conversation will begin to happen. Are orgs hunting for insiders, and if so, how? It's a conversation that's long overdue, in my opinion.